How the Instructional Design Process Concludes: A Comprehensive Overview

The final stage centers on evaluation, ensuring program effectiveness and accountability through detailed analysis, reports, and reflective documentation of outcomes and areas for growth.

The concluding phase of instructional design focuses on evaluation, a systematic process for determining the merit, worth, and significance of the learning experience. Evaluation is not merely a post-implementation check; it runs throughout the project, beginning with formative assessments during development. The ultimate aim is to ascertain whether the instructional program achieves its stated goals and objectives.

This final stage demands a shift in perspective, moving from creation to critical appraisal. It requires gathering data, analyzing results, and drawing actionable conclusions. Successfully navigating this phase ensures accountability and provides valuable insights for future instructional endeavors, fostering continuous improvement and maximizing learning impact.

The Core Goal: Evaluation in Instructional Design

Evaluation’s central purpose within the instructional design process is twofold: verifying program effectiveness and establishing accountability for achieving promised outcomes. It’s about rigorously determining whether learners acquired the intended knowledge and skills, and whether the instructional materials met the target audience’s needs.

This isn’t simply about ticking boxes; it’s a deep dive into whether the design successfully facilitated learning. Evaluation provides concrete evidence to support decisions, justify investments, and identify areas for refinement. Ultimately, it ensures that instructional efforts translate into measurable, positive impacts on learner performance and organizational goals.

Formative Evaluation: Ongoing Refinement

Formative evaluation is a continuous process woven throughout development, focused on identifying potential issues early and iteratively improving the design. It’s about proactively seeking feedback – through focus groups and expert reviews – to adjust the course before widespread implementation.

This stage isn’t about judgment, but about gathering data to refine alignment with desired learning outcomes. Pilot testing plays a crucial role, allowing designers to observe learner interactions and pinpoint areas needing clarification or modification. The goal is to ensure the instructional materials are clear, engaging, and effectively support the learning journey, leading to a polished final product.

Focus Groups and Learner Feedback

Gathering direct input from learners is paramount during the evaluation phase, and focus groups provide a valuable platform for this. These moderated discussions allow designers to observe reactions, understand challenges, and uncover unexpected insights regarding the instructional materials.

Learner feedback, collected through surveys or interviews, offers quantitative and qualitative data on the learning experience. This feedback illuminates areas where content resonates and where it falls short, guiding necessary adjustments. Analyzing this data helps ensure the design truly meets the needs of the target audience, fostering engagement and maximizing learning effectiveness.

Expert Reviews and Design Adjustments

Complementing learner feedback, expert reviews offer a critical lens on the instructional design’s technical soundness and pedagogical approach. Subject matter experts assess content accuracy, while instructional design specialists evaluate alignment with learning principles and best practices.

These reviews pinpoint potential issues – clarity, consistency, or accessibility – often missed during internal development. Based on expert recommendations, designers implement targeted adjustments to refine the materials. This iterative process ensures the final product is not only engaging but also demonstrably effective in achieving stated learning objectives, ultimately enhancing the overall quality.

Pilot Testing and Iterative Improvement

Pilot testing introduces the instructional materials to a representative sample of the target audience, providing real-world insights into their usability and effectiveness. This controlled environment allows for observation of learner interactions and collection of valuable feedback before full-scale implementation.

Data gathered from pilot testing informs iterative improvements – modifications to content, activities, or delivery methods – addressing identified challenges. This cyclical process of testing, analyzing, and refining ensures the final product is optimized for learner success, maximizing engagement and knowledge retention. It’s a crucial step towards a polished, impactful learning experience.

Summative Evaluation: Assessing Overall Effectiveness

Summative evaluation represents the final assessment of the instructional project, determining if it successfully achieved its intended learning objectives and met the needs of the target audience. This comprehensive review occurs after implementation, providing a holistic view of the program’s impact.

It examines whether promised goals were met, holding responsible parties accountable. Methods include project debriefings, detailed evaluation reports, and reflective documentation. The focus is on demonstrating value and justifying resources invested. Ultimately, summative evaluation confirms whether the instructional design effectively delivered the desired outcomes and contributed to overall program success.

Defining Instructional Effectiveness

Instructional effectiveness, at the conclusion of the design process, is fundamentally about achieving pre-defined learning outcomes and aligning with the specific needs of the intended audience. It’s not merely about completion, but demonstrable change in knowledge, skills, or attitudes.

Effectiveness is measured by whether the instructional materials successfully taught the intended concepts and whether learners can apply that knowledge. This requires a clear understanding of initial goals and objectives, serving as benchmarks for success. A truly effective program delivers measurable results and provides a return on investment, proving its value.

Key Metrics for Summative Evaluation

Summative evaluation relies on key metrics to determine overall program success, focusing on achievement of learning objectives and alignment with audience needs. These metrics include pre- and post-test scores, which demonstrate knowledge gain, and completion rates, which indicate engagement.

Furthermore, performance-based assessments reveal practical application of learned skills. Stakeholder satisfaction surveys provide valuable qualitative data. Analyzing these metrics allows for a comprehensive assessment of instructional impact. Ultimately, the goal is to determine if the program delivered the promised results and met its intended purpose, justifying the investment and effort.
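
The two quantitative metrics named above, knowledge gain from pre- and post-test scores and completion rate, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the learner records, the 0-100 score scale, and the function names are all hypothetical and not taken from any particular evaluation tool.

```python
from statistics import mean

# Hypothetical records: (learner_id, pre_score, post_score, completed)
# Scores are assumed to be on a 0-100 scale.
records = [
    ("a01", 40, 70, True),
    ("a02", 55, 85, True),
    ("a03", 60, 60, False),
    ("a04", 30, 75, True),
]

def average_gain(rows):
    """Mean raw knowledge gain (post-test minus pre-test), in score points."""
    return mean(post - pre for _, pre, post, _ in rows)

def completion_rate(rows):
    """Share of enrolled learners who completed the program."""
    return sum(1 for _, _, _, done in rows if done) / len(rows)

print(f"Average gain: {average_gain(records):.2f} points")
print(f"Completion rate: {completion_rate(records):.0%}")
```

With this sample data the average gain is 26.25 points and the completion rate is 75%. Numbers like these only become meaningful when read alongside the performance-based assessments and stakeholder surveys described above.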

Achievement of Learning Objectives

A core component of summative evaluation is verifying whether learners actually achieved the stated learning objectives. This is typically measured through assessments designed to directly evaluate mastery of the intended skills and knowledge.

Analyzing assessment data reveals the extent to which objectives were met, identifying areas of strength and weakness. Did learners demonstrate the ability to apply concepts in real-world scenarios? Were the objectives realistic and measurable? Successful achievement signifies effective instructional design, while gaps indicate a need for revision and improvement in future iterations of the program.
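
One possible way to analyze assessment data per objective is sketched below. The objective labels, the mastery cutoff of 70, and the target that 80% of learners reach mastery are assumptions chosen for illustration; a real program would take these values from its own stated objectives.

```python
# Hypothetical assessment results: objective label -> learner scores (0-100)
scores_by_objective = {
    "LO1": [80, 90, 75, 85],
    "LO2": [50, 65, 70, 40],
}

MASTERY_CUTOFF = 70  # assumed score that counts as mastery
TARGET_SHARE = 0.8   # assumed share of learners expected to reach mastery

def mastery_share(scores, cutoff=MASTERY_CUTOFF):
    """Fraction of learners scoring at or above the mastery cutoff."""
    return sum(s >= cutoff for s in scores) / len(scores)

def objectives_needing_revision(results, target=TARGET_SHARE):
    """Objectives where too few learners reached mastery."""
    return [
        name
        for name, scores in results.items()
        if mastery_share(scores) < target
    ]

for name, scores in scores_by_objective.items():
    print(name, f"{mastery_share(scores):.0%} at mastery")
print("Revise:", objectives_needing_revision(scores_by_objective))
```

In this sample, every learner reaches mastery on LO1 while only one in four does on LO2, so LO2 is flagged. A flag of this kind does not say whether the instruction or the objective itself was at fault, which is why the questions about realism and measurability above still need human judgment.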

Alignment with Target Audience Needs

Evaluating alignment with the target audience is crucial; a successful program resonates with learners’ existing knowledge, skills, and motivations. Summative evaluation assesses whether the instructional materials and delivery methods were appropriate for the intended demographic.

Consider factors like cultural relevance, accessibility, and learning preferences. Did the program address the specific needs and challenges faced by the learners? Feedback from learners, gathered through surveys and focus groups, provides valuable insights into this alignment. A strong match between content and audience ensures engagement and maximizes learning outcomes, demonstrating effective instructional design.

Evaluation Methods and Tools

A diverse toolkit supports thorough evaluation, ranging from quantitative data analysis to qualitative feedback gathering. Project debriefings offer structured discussions to capture insights from stakeholders. Evaluation reports consolidate findings, presenting key metrics and observations.

Tools include surveys, questionnaires, and learning analytics platforms to measure achievement of learning objectives. Focus groups and expert reviews provide nuanced perspectives. Reflective documentation encourages designers to critically assess the process. Utilizing a combination of methods ensures a comprehensive understanding of program effectiveness, leading to actionable improvements and informed future designs.

Project Debriefings

Structured project debriefings are crucial for capturing collective insights following implementation. These sessions bring together instructional designers, subject matter experts, and key stakeholders to discuss what worked well and identify areas needing improvement.

Facilitated discussions focus on alignment with initial goals, achievement of learning objectives, and the overall learner experience. Debriefings provide a safe space for honest feedback, fostering a culture of continuous improvement. Documenting key takeaways from these meetings informs future design decisions and ensures lessons learned are not lost, ultimately enhancing program effectiveness.

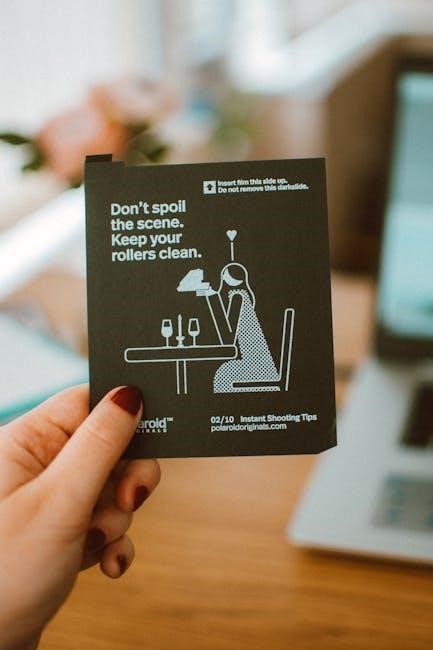

Evaluation Reports

Comprehensive evaluation reports formally document the findings of the assessment process. These reports synthesize data from various sources – formative and summative evaluations, pilot testing, and project debriefings – presenting a clear picture of instructional effectiveness.

Reports detail the achievement of learning objectives, alignment with target audience needs, and any identified areas for improvement. They should include key metrics, supporting evidence, and actionable recommendations. Well-structured reports serve as a valuable resource for stakeholders, demonstrating accountability and informing future instructional design initiatives, ensuring continuous program enhancement.

Reflective Documentation

Reflective documentation goes beyond simply reporting results; it captures the instructional designer’s critical analysis of the entire process. This involves a thorough examination of what worked well, what didn’t, and why.

It’s a space for honest self-assessment, detailing challenges encountered, decisions made, and lessons learned. This documentation isn’t just about positive outcomes, but also about acknowledging shortcomings and identifying opportunities for growth. It’s crucial for building a knowledge base and fostering continuous improvement in future instructional design endeavors, ensuring impactful learning experiences.

Documenting Positive Outcomes

Carefully documenting positive outcomes is a vital component of the final evaluation phase. This includes detailing instances where learning objectives were successfully met, and where the instructional materials resonated with the target audience.

Specific examples of learner success, positive feedback received during evaluations, and quantifiable improvements in performance should all be meticulously recorded. This evidence serves not only as a testament to the project’s effectiveness, but also as valuable data for future instructional design initiatives, showcasing best practices and successful strategies.

Identifying Areas for Improvement

Alongside documenting successes, a crucial aspect of the concluding phase involves pinpointing areas where the instructional design could be enhanced. This necessitates a candid assessment of challenges encountered during pilot testing, feedback received from learners and experts, and any discrepancies between intended and actual learning outcomes.

Identifying these areas isn’t about assigning blame, but rather about fostering a culture of continuous improvement. Detailed notes on shortcomings, suggestions for refinement, and potential roadblocks for future iterations are essential for optimizing the learning experience and maximizing program effectiveness.

Analysis and Reflection on Lessons Learned

Following documentation, a thorough analysis of collected data is paramount. This involves scrutinizing evaluation reports, learner feedback, and expert reviews to discern patterns and draw meaningful conclusions about the instructional design’s strengths and weaknesses. Reflection goes beyond simply identifying what went wrong or right; it requires understanding why.

Consider the impact of design choices on learner engagement, achievement of objectives, and overall program effectiveness. This introspective process transforms raw data into actionable insights, paving the way for informed decisions in future instructional design endeavors and ensuring continuous improvement.

The Importance of Actionable Insights

Evaluation’s true value lies not just in identifying outcomes, but in generating actionable insights. These insights must directly inform future design decisions, moving beyond simple observations to concrete recommendations for improvement. A detailed project debriefing, coupled with comprehensive evaluation reports, facilitates this process.

Effective insights pinpoint specific areas needing adjustment – whether in content delivery, assessment strategies, or alignment with learner needs. They empower instructional designers to refine their approach, ensuring subsequent iterations are more effective and contribute to achieving program goals and maintaining accountability.

Accountability and Program Goals

The concluding evaluation phase firmly establishes accountability for program success, directly linking outcomes to initially defined goals and objectives. This process determines whether promised results were achieved and if the instructional design effectively met the needs of the target audience.

Holding stakeholders responsible necessitates clear documentation of both positive outcomes and areas requiring improvement. This transparent approach ensures that lessons learned are not only identified but also actively integrated into future designs, fostering a culture of continuous improvement and responsible program management.

Instructional Materials and Content Evaluation

A critical component of the final evaluation involves a thorough assessment of the instructional materials themselves – books, films, and all supporting content. This scrutiny determines if the materials effectively facilitate learning and align with established objectives.

Evaluation focuses on clarity, accuracy, and engagement, ensuring content is accessible and supports diverse learning styles. Analyzing instructional definitions and their practical application is key. Ultimately, this step confirms whether the materials successfully deliver the intended learning experience and contribute to overall program effectiveness.

Understanding Instructional Definitions

A precise grasp of “instructional” is fundamental during the concluding evaluation phase. The term encompasses the act of teaching, the materials used, and the overall process of facilitating learning. Examining definitions – like those found in dictionaries – clarifies the intended purpose of the program’s components.

This understanding ensures alignment between the design, delivery, and the core goal of imparting knowledge or skills. Accurate interpretation of instructional objectives is vital for assessing whether the program truly instructs and achieves its stated aims, ultimately validating its effectiveness.

Linking Evaluation to Initial Goals and Objectives

The concluding evaluation must directly correlate to the initial goals and objectives established at the project’s outset. This linkage determines if the program delivered on its promises and achieved the intended learning outcomes. Were the original aims realistic and measurable?

A thorough review confirms whether the evaluation metrics accurately reflect those initial targets. Discrepancies highlight areas where the design faltered or where objectives require refinement in future iterations. This connection ensures accountability and validates the entire instructional design process, proving its worth.
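
The review described here can be pictured as a simple comparison between the targets set at the outset and the results actually measured. The sketch below is a hypothetical illustration: the metric names, target values, and status labels are invented for the example, and it assumes every metric is one where higher is better.

```python
# Hypothetical targets defined at the project's outset
targets = {"avg_post_test": 75.0, "completion_rate": 0.85, "satisfaction": 4.0}

# Hypothetical results gathered during summative evaluation
measured = {"avg_post_test": 78.5, "completion_rate": 0.70}

def compare_to_targets(targets, measured):
    """Label each initial target as met, a shortfall, or not measured."""
    report = {}
    for metric, goal in targets.items():
        if metric not in measured:
            # The evaluation never captured this target at all
            report[metric] = "not measured"
        elif measured[metric] >= goal:
            report[metric] = "met"
        else:
            report[metric] = "shortfall"
    return report

for metric, status in compare_to_targets(targets, measured).items():
    print(f"{metric}: {status}")
```

The "not measured" label matters as much as the shortfalls: it marks an initial goal that the evaluation metrics do not reflect, which is exactly the kind of gap the review is meant to surface.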

Ensuring Program Effectiveness

Ultimately, the concluding evaluation phase aims to definitively establish program effectiveness. This isn’t merely about achieving learning objectives, but about confirming the instructional materials genuinely meet the target audience’s needs and contribute to tangible improvements.

Effective programs demonstrate a clear alignment between design, delivery, and desired results. Evaluation reports, reflective documentation, and project debriefings provide crucial insights. Identifying both positive outcomes and areas for improvement is vital for continuous refinement and for maximizing the program’s impact over the long term.

Conclusion

The instructional design process doesn’t truly conclude with evaluation; it cycles back into itself, fostering continuous improvement. Actionable insights gleaned from evaluation, which come from documenting positive outcomes and pinpointing areas needing adjustment, are paramount.

Analysis and reflection on lessons learned aren’t simply post-project tasks, but foundational elements for future designs. Holding stakeholders accountable to initial goals, while embracing iterative refinement, ensures programs remain effective and relevant. This commitment to ongoing assessment and adaptation is the hallmark of successful instructional design.